TL;DR: You can now disable deep link crawling for more control over the exact URLs included in your site validation report.

Photo by Alex Eckermann on Unsplash

Rocket Validator is basically an automated web crawler that helps you validate HTML and accessibility on large sites. Instead of manually checking each individual web page, you can just enter a starting URL (typically, the front page) and we’ll automatically discover the rest of the internal web pages by following links.

That’s as simple as it gets: you don’t need to submit a long list of URLs, just give us a starting web page and we’ll discover the rest of the site. To do that, we use a crawler, also known as a web spider. It basically works like this:

- You submit a starting URL, for example https://example.com
- The crawler visits that page and finds 3 internal links:
  - /features
  - /blog
  - /pricing
- The crawler then adds these 3 web pages to the report (HTML and accessibility checks on them start in separate background jobs).
- Each of these web pages is then also visited in search of more internal links. That’s what we call deep crawling.
- More web pages are discovered on each branch, for example:
  - /features
    - /features/awesome
    - /features/fantastic
  - /blog
    - /blog/2021
    - /blog/2020
    - /blog/2019
    - /blog/tags
  - /pricing
    - /pricing/basic
    - /pricing/pro
    - /pricing/premium
- Deep crawling continues, searching for more links on the discovered web pages, until we reach the maximum number of web pages requested or no more internal links are found.
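The steps above describe a breadth-first crawl with a page limit. Rocket Validator’s own crawler isn’t shown here, so the following is only a minimal, hypothetical Python sketch of that idea: `deep_crawl`, `get_links`, `links_on` and the in-memory `SITE` link graph are illustrative names, and `get_links` stands in for actually fetching a page and extracting its links. Because this sketch is sequential its order is deterministic; as noted below, a real crawl’s discovery order also depends on response times, rate limits and redirects.

```python
from collections import deque
from typing import Callable, Iterable
from urllib.parse import urljoin, urlparse


def deep_crawl(start_url: str,
               get_links: Callable[[str], Iterable[str]],
               max_pages: int) -> list[str]:
    """Breadth-first discovery of internal pages, capped at max_pages.

    get_links(url) is a hypothetical stand-in for "fetch the page and
    extract its links", so the sketch runs without a network.
    """
    host = urlparse(start_url).netloc
    discovered = [start_url]   # pages added to the report, in discovery order
    seen = {start_url}
    queue = deque([start_url])
    while queue and len(discovered) < max_pages:
        page = queue.popleft()
        for href in get_links(page):
            url = urljoin(page, href)          # resolve relative links like "/blog"
            if urlparse(url).netloc != host or url in seen:
                continue                       # skip external and already-seen links
            seen.add(url)
            discovered.append(url)             # page joins the report
            queue.append(url)                  # and is visited later for deeper links
            if len(discovered) >= max_pages:
                break                          # report limit reached
    return discovered


# Hypothetical link graph matching the example site above.
SITE = {
    "https://example.com": ["/features", "/blog", "/pricing"],
    "https://example.com/features": ["/features/awesome", "/features/fantastic"],
    "https://example.com/blog": ["/blog/2021", "/blog/2020", "/blog/2019", "/blog/tags"],
    "https://example.com/pricing": ["/pricing/basic", "/pricing/pro", "/pricing/premium"],
}


def links_on(url: str) -> list[str]:
    return SITE.get(url, [])


pages = deep_crawl("https://example.com", links_on, max_pages=500)
print(len(pages))  # 13
print(deep_crawl("https://example.com", links_on, max_pages=4))
# ['https://example.com', 'https://example.com/features', 'https://example.com/blog', 'https://example.com/pricing']
```

With a limit of 500 the whole 13-page example site is found; with a limit of 4 the crawl stops right after the front page’s direct links.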

The pros and cons of deep crawling

Deep crawling works great in most cases, where you want to validate a small or medium web site. If you know that your site has about 300 web pages, you can request a report covering up to 500 web pages, and our web crawler will discover your whole site.

The thing is, there’s no guarantee on the discovery order. The order in which our web spider discovers the web pages on your site depends on factors like response times, rate limits, or redirects. On a small or medium web site this doesn’t really matter, because your whole site will be discovered in the end, even if a different path is followed on each crawl.

Now, what happens on a large site crawl?

Rocket Validator has a limit of 5,000 web pages per report, but you can use XML or plain text sitemaps to organize the web pages into reports. An XML or text sitemap is basically a list of the URLs on your site, commonly used to make the job easier for search engines.
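To show what “a list of the URLs on your site” looks like in each format, here is a small, hypothetical Python helper that extracts URLs from either kind of sitemap. The namespace is the one defined by the sitemaps.org protocol for `<urlset>` files; the function name `urls_from_sitemap` is illustrative, and this sketch deliberately ignores sitemap index files and other details of how Rocket Validator actually reads sitemaps.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol for <urlset> sitemaps.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def urls_from_sitemap(content: str) -> list[str]:
    """Return the page URLs listed in an XML or plain text sitemap."""
    text = content.strip()
    if text.startswith("<"):
        # XML sitemap: collect every <url><loc>...</loc></url> entry.
        root = ET.fromstring(text.encode("utf-8"))
        return [loc.text.strip()
                for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
                if loc.text]
    # Plain text sitemap: one URL per line, blank lines ignored.
    return [line.strip() for line in text.splitlines() if line.strip()]


xml_sitemap = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/pricing</loc></url>
</urlset>"""

text_sitemap = "https://example.com/\nhttps://example.com/blog\n"

print(urls_from_sitemap(xml_sitemap))   # ['https://example.com/', 'https://example.com/pricing']
print(urls_from_sitemap(text_sitemap))  # ['https://example.com/', 'https://example.com/blog']
```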

When you use a sitemap with the URLs of your internal web pages, you’re making it much easier (and faster!) for our crawler to cover your site. Basically, our web spiders don’t need to discover your site, because you’re explicitly telling us the exact URLs to validate. In those cases, disabling deep crawling is a good option, because otherwise additional web pages not listed in your sitemap may be discovered via deep crawling, which can be confusing.
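The effect of the Deep Crawl setting can be sketched as follows. This is a hypothetical Python illustration, not Rocket Validator’s implementation: `report_urls`, its `deep_crawl` flag, `links_on` and the `SITE` link graph are assumed names. With deep crawling off, the report contains exactly the seed URLs (for example, from a sitemap); with it on, internal links found on those pages are followed breadth-first until the page limit is reached.

```python
from collections import deque
from typing import Callable, Iterable
from urllib.parse import urljoin, urlparse


def report_urls(seeds: list[str],
                get_links: Callable[[str], Iterable[str]],
                max_pages: int,
                deep_crawl: bool) -> list[str]:
    """Choose the URLs for a report from seed URLs (for example, a sitemap).

    deep_crawl=False: validate exactly the seeds, nothing else.
    deep_crawl=True: also follow internal links found on each page.
    """
    urls: list[str] = []
    seen: set[str] = set()
    queue = deque()
    for seed in seeds:                   # seeds come first, in the order given
        if seed not in seen and len(urls) < max_pages:
            seen.add(seed)
            urls.append(seed)
            queue.append(seed)
    if not deep_crawl:
        return urls                      # exact list: no extra pages are discovered
    hosts = {urlparse(seed).netloc for seed in seeds}
    while queue and len(urls) < max_pages:
        page = queue.popleft()
        for href in get_links(page):
            url = urljoin(page, href)
            if urlparse(url).netloc not in hosts or url in seen:
                continue                 # external or already listed
            seen.add(url)
            urls.append(url)
            queue.append(url)
            if len(urls) >= max_pages:
                break                    # report limit reached
    return urls


# Hypothetical sitemap and link graph.
SITE = {
    "https://example.com/blog": ["/blog/2021", "/blog/2020"],
    "https://example.com/pricing": ["/pricing/basic", "https://other.example/"],
}
sitemap = ["https://example.com/blog", "https://example.com/pricing"]


def links_on(url: str) -> list[str]:
    return SITE.get(url, [])


print(report_urls(sitemap, links_on, 500, deep_crawl=False))
# ['https://example.com/blog', 'https://example.com/pricing']
print(report_urls(sitemap, links_on, 500, deep_crawl=True))
```

With `deep_crawl=False` only the two sitemap URLs are returned. With `deep_crawl=True` the three internal pages linked from them (`/blog/2021`, `/blog/2020`, `/pricing/basic`) are appended, while the external link is skipped.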

So in a nutshell:

- If you have an exact list of URLs to validate and you don’t want extra web pages to be discovered from those, then disable deep crawling in your reports.

- If you’re only providing some URLs to begin with and you’d like our web spiders to expand that list automatically, then enable deep crawling in your reports.

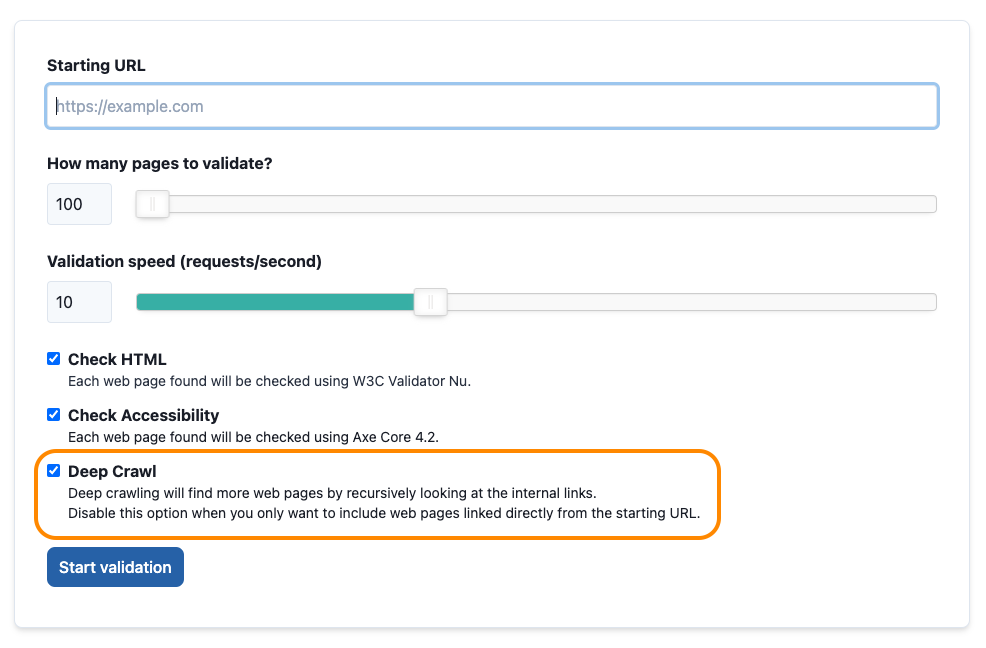

To do that, just use the new Deep Crawl checkbox in the Report and Schedule forms: