TL;DR: The new Initial URLs feature lets you manually specify web pages to include in your report from the start. Combine it with the Exclusions and Deep Crawling options to tailor the web crawler's behavior to your needs.

Rocket Validator provides the simplest approach to generating site-wide validation reports. All you need to do is enter a Starting URL, typically the home page, and our fully automated web crawler will find the internal links from there and check each web page using the W3C HTML Validator and the Axe Core accessibility checker.

Our crawler automatically finds the internal links on your site by following them from the Starting URL, but there are times when you need more control over the exact web pages included in the report. For example, you may want to ensure that certain pages are included, or that some section is entirely excluded. Let’s explore the advanced options that give you that control over your site validation reports.

Using an XML (or TXT) sitemap

As explained in Sitemaps.org:

Sitemaps are an easy way for webmasters to inform search engines about pages on their sites that are available for crawling. In its simplest form, a Sitemap is an XML file that lists URLs for a site along with additional metadata about each URL (when it was last updated, how often it usually changes, and how important it is, relative to other URLs in the site) so that search engines can more intelligently crawl the site.

Web crawlers usually discover pages from links within the site and from other sites. Sitemaps supplement this data to allow crawlers that support Sitemaps to pick up all URLs in the Sitemap and learn about those URLs using the associated metadata. Using the Sitemap protocol does not guarantee that web pages are included in search engines, but provides hints for web crawlers to do a better job of crawling your site.
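If you need to produce a sitemap yourself, the sketch below shows one possible way to build both formats from a list of URLs using only the Python standard library. The function names and the example.com URLs are illustrative assumptions, and the XML output contains only the required `loc` element, without the optional metadata mentioned above. A plain-text sitemap is simply one absolute URL per line.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the Sitemap protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_xml_sitemap(urls):
    """Return a minimal XML sitemap (as a string) listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        url_element = ET.SubElement(urlset, "url")
        ET.SubElement(url_element, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


def build_txt_sitemap(urls):
    """Return a TXT sitemap: one absolute URL per line."""
    return "\n".join(urls) + "\n"


if __name__ == "__main__":
    # Hypothetical pages, used only for illustration.
    pages = ["https://example.com/", "https://example.com/about"]
    print(build_xml_sitemap(pages))
    print(build_txt_sitemap(pages))
```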

Rocket Validator accepts XML and TXT sitemaps, as long as the sitemap file is hosted in the same subdomain as the web pages. Using a sitemap is often the most convenient option, as you probably already have one on your site for SEO purposes. There are times, however, when that’s not an option, because you don’t have access to the server or you don’t want to make the extra effort to build a sitemap. That’s where our advanced crawling options for Initial URLs and Exclusions come in, but let’s first talk about Deep Crawling.

Deep Crawling

When Rocket Validator starts generating your site validation report, it visits your Starting URL (which can be an HTML page or an XML / TXT sitemap), looks for the linked internal web pages (those in the same subdomain), and adds them to the site report.

This process is then repeated for each web page added to the report, so that the crawler recursively finds new internal links. That’s what we call Deep Crawling, and it’s how we can discover thousands of web pages on your site by following internal links.
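To make the idea concrete, here is a minimal sketch of recursive link discovery written as a breadth-first traversal. It is not Rocket Validator's actual implementation: `fetch_links` is a hypothetical callable standing in for "download the page and extract its links", `max_pages` is an invented safety limit, "internal" is approximated by comparing hostnames, and the Starting URL is assumed to be an HTML page that belongs in the report itself.

```python
from collections import deque
from urllib.parse import urlsplit


def is_internal(url, starting_url):
    """Treat a URL as internal when its host matches the Starting URL's host."""
    return urlsplit(url).hostname == urlsplit(starting_url).hostname


def deep_crawl(starting_url, fetch_links, max_pages=1000):
    """Discover internal pages breadth-first, starting from starting_url.

    fetch_links is a hypothetical callable returning the links found on a page.
    """
    report = [starting_url]  # assumption: the Starting URL is an HTML page
    seen = {starting_url}
    queue = deque([starting_url])
    while queue and len(report) < max_pages:
        page = queue.popleft()
        for link in fetch_links(page):
            if link in seen or not is_internal(link, starting_url):
                continue  # skip duplicates and external links
            seen.add(link)
            report.append(link)
            queue.append(link)  # newly found pages are crawled in turn
            if len(report) >= max_pages:
                break
    return report


if __name__ == "__main__":
    # A tiny in-memory "site" used instead of real HTTP requests.
    site = {
        "https://example.com/": ["https://example.com/a", "https://other.example.org/x"],
        "https://example.com/a": ["https://example.com/b", "https://example.com/"],
        "https://example.com/b": [],
    }
    print(deep_crawl("https://example.com/", lambda url: site.get(url, [])))
```

The `seen` set is what keeps the recursion from looping forever when pages link back to each other.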

Deep Crawling is enabled by default, but as a Pro user, you can disable this behavior using the Advanced Crawling options for cases where you want more control over the exact web pages that you want to include in your report. Check out this blog post for more details about Deep Crawling.

Initial URLs

Internal links discovery from the Starting URL is the simplest approach to generating a site report, but there are times when you want to specify the exact web pages to be included and you can’t easily craft an XML sitemap.

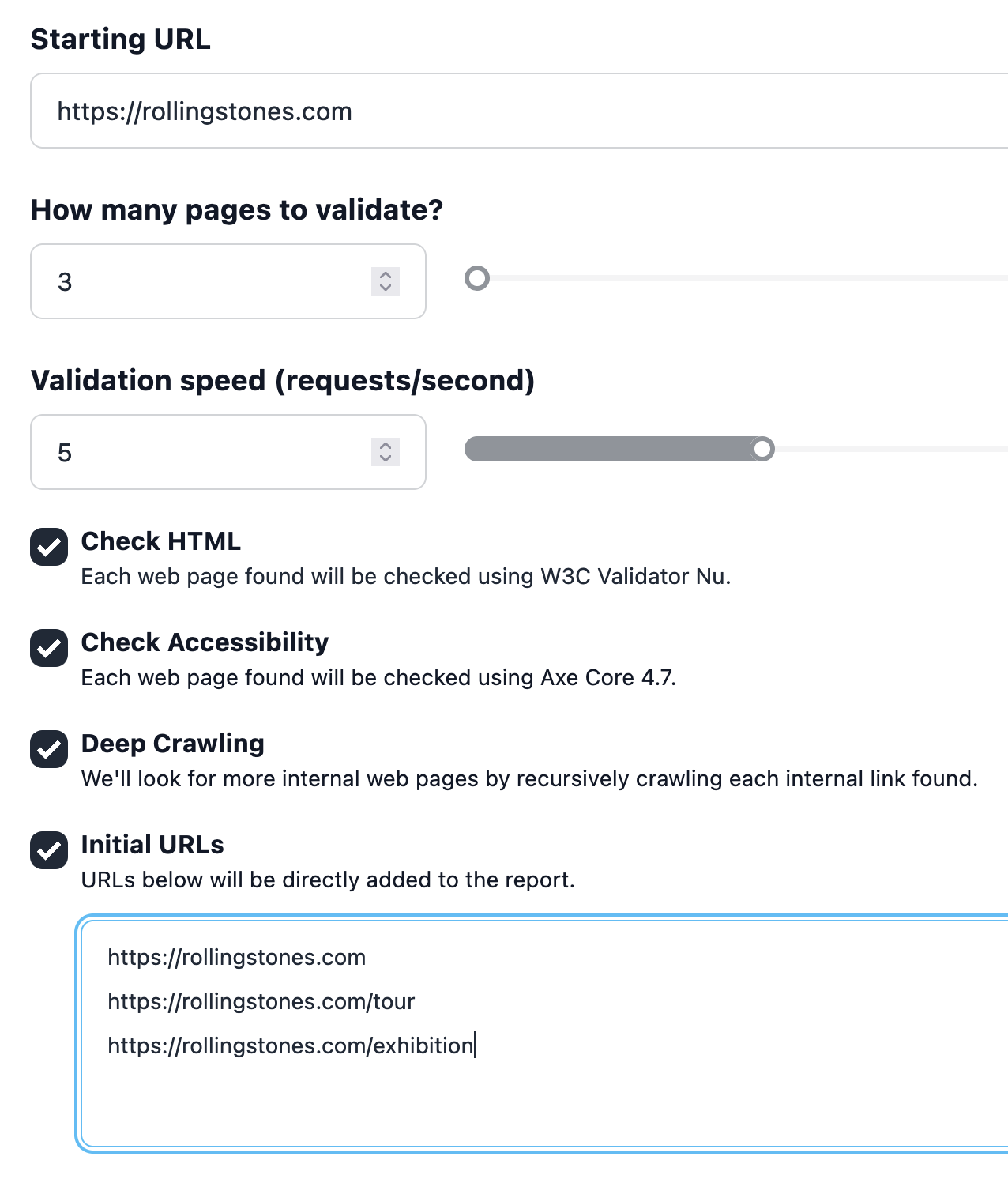

The new Initial URLs advanced option is here to solve this. You can now specify the exact list of URLs to be included in the report, so our crawler will add them directly on the first pass. The new field is a text area that accepts a list of URLs, one per line, where each URL must be absolute and internal relative to the Starting URL field.

Our web crawler will take these initial URLs and add them to the site report. After that, if Deep Crawling is enabled, it will recursively follow them to add more web pages. If it’s disabled, it will stop there.
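If you generate your list of Initial URLs with a script, a quick check like the following can catch entries that are not absolute or not internal before you paste them in. This is only a sketch under stated assumptions: the function name is hypothetical, "internal" is approximated as "same hostname as the Starting URL", and the validation Rocket Validator actually performs may differ.

```python
from urllib.parse import urlsplit


def parse_initial_urls(text, starting_url):
    """Split a one-URL-per-line text block into accepted and rejected URLs."""
    start_host = urlsplit(starting_url).hostname
    accepted, rejected = [], []
    for line in text.splitlines():
        url = line.strip()
        if not url:
            continue  # ignore blank lines
        parts = urlsplit(url)
        # Absolute here means: has an http(s) scheme and a hostname.
        is_absolute = parts.scheme in ("http", "https") and bool(parts.hostname)
        if is_absolute and parts.hostname == start_host:
            accepted.append(url)
        else:
            rejected.append(url)
    return accepted, rejected


if __name__ == "__main__":
    # Hypothetical text area content.
    text_area = """https://example.com/pricing
/relative/path
https://blog.example.com/post
https://example.com/contact
"""
    accepted, rejected = parse_initial_urls(text_area, "https://example.com/")
    print("accepted:", accepted)
    print("rejected:", rejected)
```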

Excluding URLs

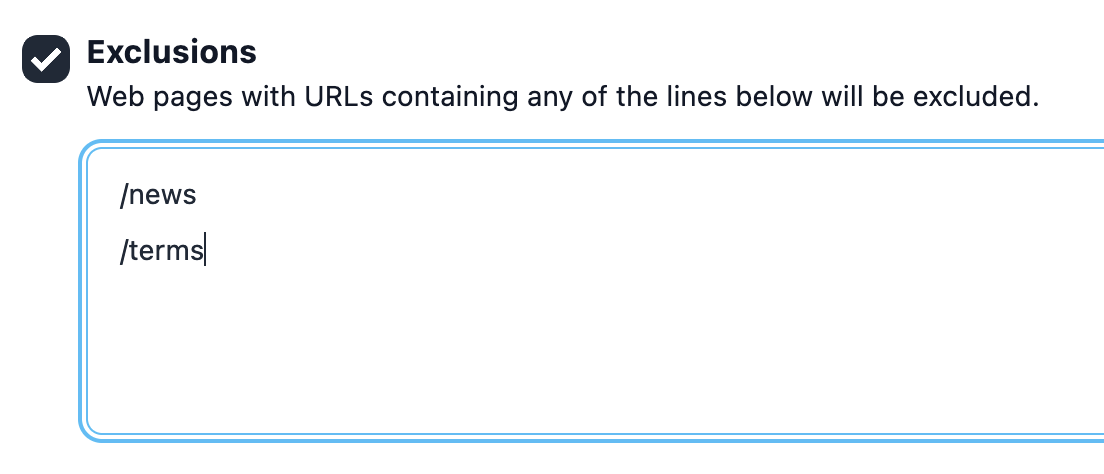

In case you want to skip some URLs or even whole sections of your site, you can use the new Exclusions feature. Just enter some URLs or paths, one per line, and URLs containing any of them will be excluded from your site validation report. More about Exclusions in this blog post.
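The "URLs containing any of them" rule amounts to a substring match. The short sketch below illustrates that behavior as described here; the function name and sample URLs are made up for the example, and it assumes a plain, case-sensitive match.

```python
def apply_exclusions(urls, exclusions_text):
    """Drop every URL that contains any non-empty line of exclusions_text."""
    patterns = [line.strip() for line in exclusions_text.splitlines() if line.strip()]
    return [url for url in urls if not any(pattern in url for pattern in patterns)]


if __name__ == "__main__":
    # Hypothetical discovered pages and exclusion entries.
    discovered = [
        "https://example.com/",
        "https://example.com/blog/2020/hello",
        "https://example.com/docs/intro",
        "https://example.com/legal/terms.html",
    ]
    exclusions = "/blog/\nhttps://example.com/legal/terms.html\n"
    print(apply_exclusions(discovered, exclusions))
```

Because the match is a simple "contains" test, a short entry such as `/blog/` removes a whole section, while a full URL removes a single page.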

Combining Advanced Crawling Options

The new Advanced Crawling controls give you a lot of flexibility to define how you’d like our web crawler to behave:

- Use the default options to let our web crawler automatically discover the internal web pages from a Starting URL.

- Enter an XML or TXT sitemap to define a specific list of URLs to be validated, and disable Deep Crawling to just validate the ones in that list.

- Define Initial URLs when you don’t have access to XML sitemaps, and either leave Deep Crawling enabled to add the linked web pages, or disable it to restrict the report to the initial list.

- Specify exclusions to skip specific sections of your site.
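The interplay between these options can be sketched as a single function. Everything in this example is an illustrative assumption rather than Rocket Validator's real code or API: `CrawlOptions`, `collect_pages`, and `fetch_links` are hypothetical names, the Starting URL is assumed to be an HTML page that is itself part of the report, and `fetch_links` is assumed to return only internal links.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class CrawlOptions:
    """Hypothetical container mirroring the options described in this post."""
    starting_url: str
    initial_urls: list = field(default_factory=list)
    exclusions: list = field(default_factory=list)
    deep_crawling: bool = True


def collect_pages(options, fetch_links):
    """Return the report URLs in discovery order.

    fetch_links is a hypothetical callable returning the internal links
    found at a given URL.
    """
    report, seen = [], set()

    def add(url):
        """Add url to the report unless it is a duplicate or excluded."""
        if url in seen or any(pattern in url for pattern in options.exclusions):
            return False
        seen.add(url)
        report.append(url)
        return True

    add(options.starting_url)
    # First pass: pages linked from the Starting URL, then the Initial URLs.
    first_pass = list(fetch_links(options.starting_url)) + list(options.initial_urls)
    queue = deque([url for url in first_pass if add(url)])

    # Later passes only happen when Deep Crawling is enabled.
    while options.deep_crawling and queue:
        page = queue.popleft()
        queue.extend([link for link in fetch_links(page) if add(link)])
    return report


if __name__ == "__main__":
    site = {
        "https://example.com/": ["https://example.com/docs", "https://example.com/blog/"],
        "https://example.com/docs": ["https://example.com/docs/api"],
        "https://example.com/landing": ["https://example.com/landing/thanks"],
    }
    options = CrawlOptions(
        "https://example.com/",
        initial_urls=["https://example.com/landing"],
        exclusions=["/blog/"],
        deep_crawling=False,
    )
    print(collect_pages(options, lambda url: site.get(url, [])))
```

With Deep Crawling disabled, the sketch stops after the first pass; enabling it also pulls in the pages linked from the docs and landing pages, while anything containing `/blog/` stays out in both cases.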

We hope the Advanced Crawling Options give you a new level of control in your site validation reports!